Learn about the generative AI revolution and why clear university policies are essential for student success and fairness.

Oleksandra Zhura


The conversation around AI in education has been dominated by assumptions rather than evidence. Headlines warn of widespread cheating, while institutions scramble to implement detection software and blanket bans. But what do educators actually think about student AI use when you sit down and ask them directly?

New research based on surveys and interviews with college educators reveals that while only 7% allow unrestricted AI use, 71% permit AI with guidelines. This suggests that teachers increasingly embrace AI as a learning tool when it is used ethically. The key conditions for acceptance are transparency, engagement with sources, and maintaining student agency in the learning process.

Over the past two months, we conducted in-depth interviews with 40 college instructors across writing-heavy disciplines – from composition and literature to business communications and social sciences. These conversations, lasting 45 minutes each, revealed a nuanced landscape that challenges common misconceptions about faculty attitudes toward AI.

These findings align with broader institutional trends documented by EDUCAUSE[1], which found that 73% of institutions adopt permissive or neutral rather than restrictive AI policies. Far from the resistance narrative that dominates media coverage, we found educators increasingly willing to embrace AI as a learning tool – when certain conditions are met.

This research was conducted by Litero AI in August-September 2025. Alongside the interviews, the methodology included a quantitative survey of 42 educators across multiple institutions and disciplines.

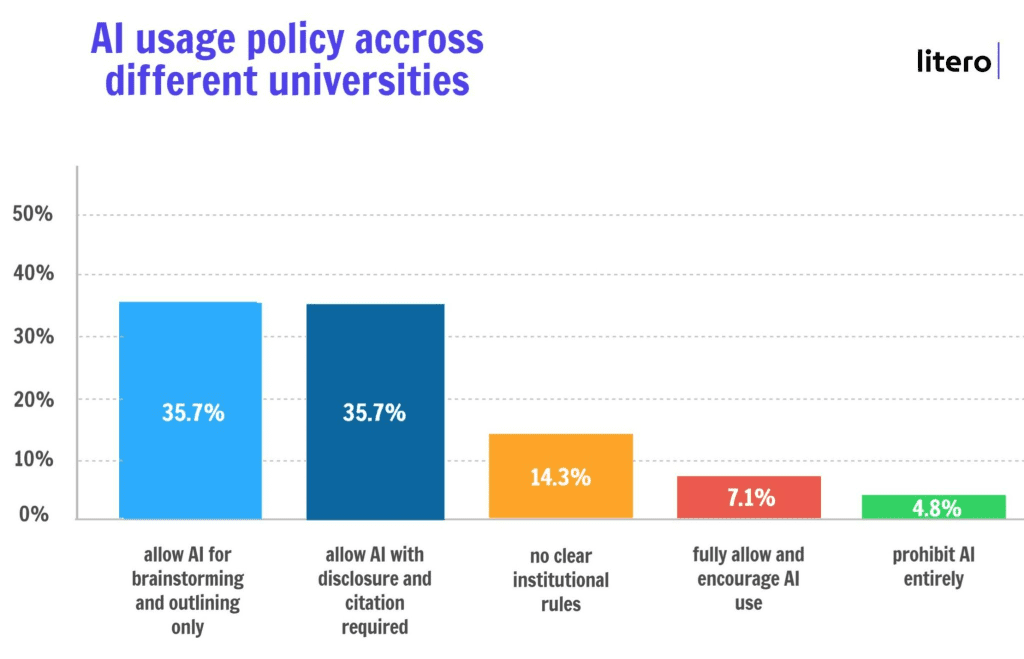

Survey data from 42 educators reveal a more permissive landscape than typically reported:

This distribution contradicts claims of widespread faculty resistance. The Digital Education Council’s 2025 global survey[2] of 1,681 faculty from 52 institutions found similar patterns, with 57% preferring “AI permitted with disclosure and specific instructions” for student assignments.

“We’ve moved beyond the binary thinking about AI – that you’re either for it or against it,” explains Rachel Kolman, who teaches writing at Seattle Central College and creates AI policies for her students. “I want my students to use AI to become better writers, not to avoid writing altogether. When more than 50% of a paper is AI-generated, we will have a conversation about it, and then I’ll give a student the chance to rewrite, but that rarely happens once students understand the boundaries.”

This sentiment reflects a broader shift we observed: educators moving from reactive prohibition to proactive integration. The institutions and instructors showing the most success have moved beyond detection and punishment toward education and authentic assessment design.

When we asked educators what would make them comfortable recommending an AI writing tool to students, transparency emerged as the overwhelming priority. Split-screen interfaces that show AI contributions alongside student work were consistently praised across interviews.

“I don’t mind students using AI as long as it’s disclosed with evidence,” says Reggie Clark, a high school English teacher and adjunct college professor from Maine. “If you used AI for planning and outlining, show me screenshots of that process or your AI usage log. When I can see what the student wrote versus what AI suggested – that’s transparency done right.”

This emphasis on process over product represents a fundamental shift in how academic integrity is conceptualized. Rather than focusing solely on the authenticity of final submissions, educators increasingly value evidence of student engagement and critical thinking throughout the writing process.

The most trusted approaches include:

Christine, who teaches university-level history and writing courses, has abandoned AI detection tools entirely:

“Our institution gave up on detectors – it wasn’t really productive. AI is not going anywhere, so instead of playing detectives, we have to figure out intelligent ways to make it work for us and for the learning process, as opposed to pretending nobody’s using it. The focus should be on building skills, not catching cheaters.”

One of the clearest patterns in our interviews was the distinction educators draw between mechanical and engaged AI use for research. While 78% of instructors welcome AI assistance in finding sources, the instructors we interviewed unanimously reject what several called “bibliography automation.”

Jane, who teaches in the UK and has successfully used AI tools for literature reviews, explains the difference:

“AI that helps students understand what they’re looking for, decode academic terminology, or identify research gaps? That’s actually teaching them to be better researchers. But AI that just spits out a complete work without engagement? That’s not helpful; students won’t learn from what they’ve written this way.”

Her approach includes requiring declarations of AI use and demonstrating how to decode academic terms. She has found that this practice helps maintain research integrity while still taking advantage of what AI can do.

Successful research integration requires what educators term “engagement gates” – requirements that students demonstrate understanding and interaction with AI-generated sources before proceeding to drafting. These include:

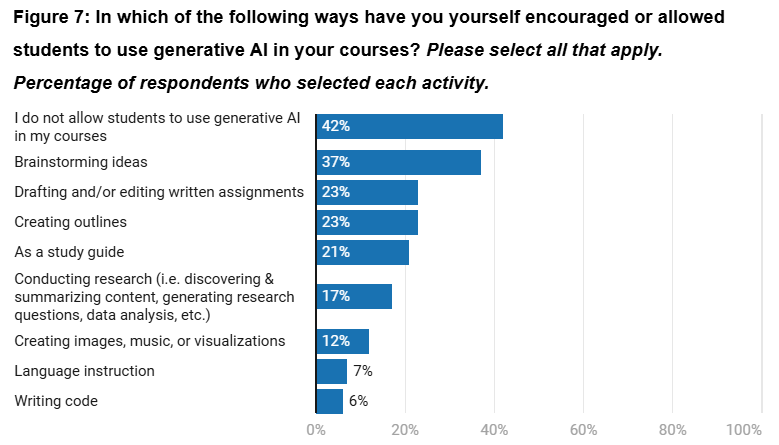

The Ithaka S+R 2024 study[3] of 2,654 faculty members supports this approach, finding that only 42% of instructors completely prohibit student use of generative AI, while the majority focus on establishing appropriate use guidelines rather than blanket restrictions.

Source: Ithaka S+R

Perhaps our most concerning finding relates to AI “humanization” tools designed to make AI-generated text appear more human-written. Multiple educators reported awareness that students use these tools specifically to evade AI detection.

Amy Hartzell, a Communications Professor who teaches at a university that generally bans AI unless instructor-approved, has observed this pattern firsthand:

“I establish baseline writing samples through handwritten work early in the semester, so I can track changes in student voice. And a lot of students use AI humanizers, which defeats the educational purpose entirely.”

Rather than relying on detection software, which 73% of the educators we interviewed distrust because of concerns about false positives, most instructors prefer pattern recognition and baseline comparison. They establish each student’s writing voice early through in-class writing samples and track dramatic stylistic changes over time.

“Humanizer tools are particularly problematic because they’re designed for evasion rather than learning,” notes Reggie Clark, reflecting a sentiment shared across interviews. “The AI tools that work ethically are the ones that make the collaboration visible, not invisible.”

When it comes to AI-assisted grading and feedback, educators draw clear lines between supportive and replacement functions. The overwhelming preference is for AI that explains the “why” and “how” of improvements rather than simply providing corrections.

Christine has successfully integrated AI into her feedback workflow:

“I use AI to accelerate first-round feedback, but I always teach students to verify the suggestions. Rubric-based feedback that shows students not just what’s wrong, but how to fix it – that’s where AI becomes pedagogically valuable.”

Successful implementations include:

The approach aligns with research from the Digital Education Council[4] showing that 61% of faculty have used AI in teaching, though 88% use it minimally, indicating careful rather than wholesale adoption.

A surprising finding from our research was the rapid adoption of AI citation practices. Despite initial resistance, 84% of interviewed educators now require students to cite AI tools when they use them, treating these tools as academic sources rather than invisible assistance.

Natalie Sappleton, who teaches business and social work at Manchester Metropolitan University, has implemented comprehensive citation requirements:

“Students must cite the prompts and tools they use on their reference pages. We enforce this through our code of conduct with graded penalties, but once students understand the expectation, compliance is high.”

Amy Hartzell takes a similar approach: “I require APA citations for AI use, just like any other source. This opens up important conversations about source reliability and the difference between information and analysis.”

The most sophisticated approaches include citation of specific prompts used, acknowledgment of the extent of AI contribution, and reflection on the appropriateness of AI assistance for different parts of the assignment.

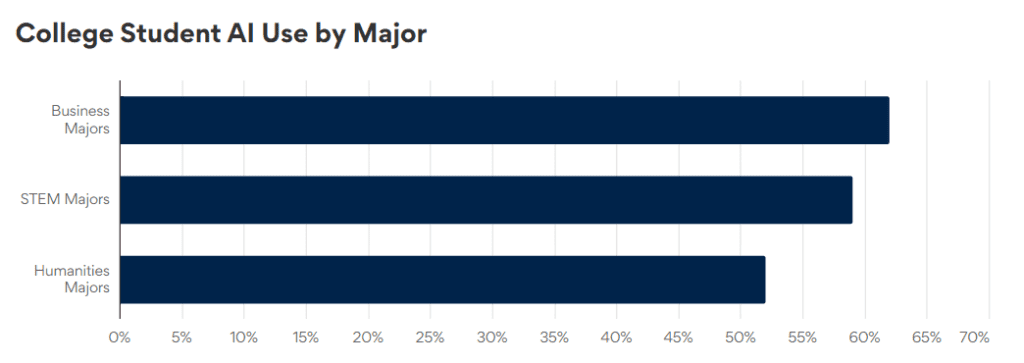

Our research revealed significant disciplinary variations in AI acceptance and integration strategies, consistent with findings from BestColleges[5] showing that STEM fields demonstrate 40-60% higher acceptance rates than humanities disciplines.

Source: BestColleges

STEM educators tend to view AI as:

Humanities educators show more concern about:

However, both groups share common ground on core principles: student agency, transparency, and learning enhancement over task completion.

Gwooyim Gyat, who teaches ethics and justice studies with a focus on Indigenous and marginalized communities at Royal Roads University, articulates the balance:

“Grammar help, clarification, outlines, and literature search are acceptable. It becomes problematic when AI eclipses the student’s voice or critical thinking. We need to normalize transparent citation while ensuring equity – not all students have the same digital literacy background.”

When we asked educators about student attitudes, a consistent theme emerged: students generally want to use AI ethically but need clear guidance on appropriate boundaries.

“I don’t think my students are trying to cheat – they’re just trying to use AI as a crutch for their learning,” observed Rachel Kolman. “When I provide clear guidelines about what kinds of AI help are appropriate for each assignment, compliance rates are excellent. The key is involving students in policy creation rather than imposing rules from above.”

Broader research on student behavior points in the same direction. The King’s Business School case study[6] found that 74% of students failed to declare AI use despite mandatory requirements, suggesting that overly restrictive policies may drive AI use underground rather than promote ethical practice.

Educators reported that students prefer educational approaches over punitive ones, with 89% noting increased honesty when AI policies focus on learning outcomes rather than detection and punishment.

Perhaps the most significant change we observed is the evolution of assessment methods. Educators are rapidly moving beyond traditional essay assignments toward formats that naturally integrate AI while maintaining learning objectives.

Jeremy DeLong, Associate Professor of Philosophy, who maintains strict AI bans in his courses, acknowledges the challenge:

“I use replication tests – prompting AI with the same assignment requirements and comparing outputs – to catch violations. But I recognize this approach isn’t sustainable long-term. The future probably lies in redesigning assignments rather than policing them.”

Emerging assessment approaches include:

Hamza Javaid, who teaches Business Management at Canterbury Christ Church University and uses a daily stack of AI tools, including Perplexity for research and Gamma for presentations, advocates for visible workflow documentation:

“Students should be able to show their planning process, their iterations, their decision-making. That’s what demonstrates learning, not just the final product.”

Our research identified key characteristics of successful institutional AI policies. According to EDUCAUSE[7], only 23% of institutions had AI-related acceptable use policies in place as of 2024, but those that did showed remarkable sophistication.

Clear guidelines with flexibility: Institutions providing specific examples while allowing course-level customization see higher compliance rates and faculty satisfaction.

Education over enforcement: Universities emphasizing AI literacy training rather than detection software report more positive outcomes.

Faculty involvement: Policies developed with significant faculty input through shared governance show greater adoption and effectiveness.

Regular updates: Institutions with quarterly or semester-based policy reviews adapt more successfully to technological changes.

The most successful institutions have moved beyond the initial panic response documented in late 2022, when ChatGPT’s release created immediate disruption across higher education, leading to hasty bans and network blocks.

These insights reveal specific opportunities for AI writing platforms to better serve educational needs. Across interviews, educators consistently emphasized the need for transparency features that make AI contributions visible to both students and instructors.

“Tools that can show exactly what the AI contributed versus what the student wrote – that addresses my primary concern about academic integrity,” explains Reggie Clark, whose positive experience with transparent AI writing platforms like Litero AI has shaped his recommendations to students.

Other high-priority features include:

As Jane from the UK noted after successfully using AI tools for literature review work:

“The best AI writing tools won’t be the ones that can’t be detected – they’ll be the ones that make learning visible and support proper academic practices like citation and source engagement.”

Our research suggests higher education is at a critical juncture. Institutions that continue to rely on blanket restrictions risk losing students to more AI-forward competitors while missing opportunities to prepare graduates for AI-integrated workplaces.

The evidence strongly supports nuanced, educational approaches that embrace AI’s potential while maintaining academic integrity through transparency, skill development, and authentic assessment design.

As more educators experiment with AI integration, several trends are emerging:

Collaborative policy development involving students, faculty, and administration produces more effective and sustainable approaches than top-down mandates.

Assignment redesign that naturally incorporates AI while preserving learning objectives shows more promise than AI-proofing strategies.

Skill development focus on AI literacy, prompt engineering, and critical evaluation of AI output prepares students for professional contexts.

Process documentation that makes thinking visible benefits both learning assessment and academic integrity verification.

The conversation has shifted from “How do we stop students from using AI?” to “How do we teach students to use AI responsibly and effectively?” This represents not just a policy change, but a fundamental reimagining of what learning looks like in an AI-integrated world.

Digital Education Council research[8] shows that 75% of faculty who regularly use AI tools believe students need AI skills for professional success, supporting this pedagogical evolution.

Gwooyim Gyat captures the broader implications:

“AI should be a tool to build thinking, not replace it. When we frame it that way – as support for learning rather than automation of learning – both students and faculty are more comfortable with integration.”

For AI writing tools, this creates both opportunity and responsibility. The platforms that will earn educator trust and recommendation are those that prioritize learning enhancement over task completion, transparency over invisibility, and student agency over automation.

The 7% who allow unrestricted AI use may grab headlines, but the majority who thoughtfully integrate AI with guidelines represent the true future of educational technology, one where human intelligence and artificial intelligence collaborate in service of learning.

This research was conducted by Litero AI through surveys and interviews with college educators from August-September 2025. Litero AI provides transparent, ethical writing assistance that prioritizes student learning and maintains academic integrity through clear authorship tracking and educational scaffolding.


Cite this article


Zhura, O. (2025, September 17). What college teachers really think about AI use by students: insights from deep interviews with college educators. Litero Blog. https://litero.ai/blog/what-college-teachers-really-think-about-ai-use-by-students/

Oleksandra is a content writing specialist with extensive experience in crafting SEO-optimized and research-based articles. She focuses on creating engaging, well-structured content that bridges academic accuracy with accessible language. At Litero, Oleksandra works on developing clear, reader-friendly materials that showcase how AI tools can enhance writing, learning, and productivity.
