Litero Writing Lab: independent study on AI-assisted academic writing

Maria Ivakhnenko


Executive summary

In August 2025, Litero.ai ran an independent comparative study on how AI writing assistance impacts student writing quality, time efficiency, and academic workflow.

Study design:

- 20 university students each wrote a 3-page essay on the same prompt about historical perspectives of terrorism and symbolism.

- Half used only traditional methods (control group), half used Litero’s AI platform (AI group).

- Independent educators graded all submissions blindly using standardized rubrics.

Key findings:

- Time efficiency: AI-assisted students spent 70% less time on the assignment (an average of 1.8 hours vs. 6 hours).

- Quality: Grades were comparable (B- average with AI vs. B average with traditional methods).

- Workflow shift: AI users focused on verification and refinement, while traditional writers spent most effort on content generation and structure.

- Student experience: AI-assisted writers reported steady confidence and less anxiety; traditional writers described breakthrough moments after overcoming major obstacles.

- Integrity: Both groups maintained academic standards when proper guidelines were followed.

Conclusion:

AI support substantially reduced writing time while keeping academic quality comparable. The findings provide evidence-based insights for educators considering how to integrate AI tools into higher education.

Study design & methodology

Participants: 20 current university students across various disciplines, recruited from freelance writing platforms and randomly assigned to control or AI-assisted groups.

Task: All participants wrote a 3-page academic essay analyzing “how historical perspectives of terrorism have evolved over time, highlighting the role and significance of symbolism in terrorist motivations.” No specific sources were required; students conducted independent research.

Conditions:

- Control group (10 students): Used only traditional tools (Word, Google Docs, standard research methods). No AI assistance permitted.

- Litero group (10 students): Used the Litero AI platform for drafting, research assistance, citation support, and editing. Students were required to use Litero for at least 80% of their writing process.

Blinded evaluation: All submissions were anonymized and formatted identically before grading. Two independent educators evaluated papers using standardized academic rubrics, unaware of which group each essay came from.

Data collection: Students reported time spent, workflow challenges, and moments of frustration and confidence, and submitted detailed documentation of their writing process.

Key research questions

- Quality impact: Does AI writing assistance affect the academic quality of student work when evaluated by independent educators?

- Time efficiency: How does AI assistance change the time investment required for academic writing tasks?

- Workflow transformation: What aspects of the writing process change when students have access to AI tools, and how do students experience these changes?

- Academic integrity: Can AI-assisted writing maintain academic standards when proper guidelines are followed?

Methodology transparency

Compliance Verification: To ensure group integrity, the control group submitted version history screenshots proving no AI use, while the Litero group provided platform usage screenshots documenting their AI-assisted workflow throughout the writing process.

Bias Prevention: The study employed random assignment to groups with identical prompts and requirements for all participants. Complete anonymization occurred before grading, with independent evaluators who had no stake in the results reviewing all submissions without knowledge of group assignment.

Academic Rigor: All participants completed standard 3-page essays with proper citation requirements under university-level research expectations. Professional rubric-based evaluation ensured consistent assessment, with standardized formatting applied to all submissions before the blind review process.

Study limitations & scope

This study examined a single writing task (analytical essay) over a short timeframe. Results reflect the specific context of terrorism/symbolism analysis and may vary across different academic disciplines, assignment types, or student populations. The study focuses on immediate workflow and quality impacts rather than long-term learning outcomes.

Study results: traditional vs AI-assisted academic writing

Teacher feedback

Litero group: AI-assisted academic writing

The AI-assisted papers earned a B- average with solid foundational strengths.

Strengths: Most achieved clear, focused theses and logical organization, with one standout paper earning praise for “exceptionally well done” writing and in-depth analysis.

Weaknesses: Teachers noted room for improvement in providing more specific evidence (dates, concrete examples), strengthening connections between points, and following detailed assignment requirements, particularly the required source evaluation section, which most students omitted.

Control group: traditional academic writing

The traditional writing group earned a B average with strong organizational skills and varied execution.

Strengths: Several papers featured excellent, concise theses and thorough evidence that supported their arguments well, with solid organization and flow throughout.

Weaknesses: Some papers had unclear introductions or no thesis at all, and others contained grammatical errors and awkward phrasing that occasionally made the meaning difficult to follow. Like the AI-assisted group, most also omitted the required source evaluation methodology section.

Student self-reflection

Control group: traditional academic writing

Primary pain points:

“My writing process was challenging in that I had to create a bridge between the historical narrative and the symbolism within the given limit of three pages.”

- Content organization within constraints

- Source accessibility barriers – “It was difficult to locate concise but authoritative sources that were not behind paywalls”

- Manual research refinement – Students had to learn better search strategies through trial and error

- Paragraph restructuring – Extensive manual rearrangement of ideas and flow

Typical workflow:

- Heavy reliance on traditional academic databases (University Library, ScienceDirect, Oxford Academic)

- Extensive time spent on source evaluation and credibility assessment

- Manual organization and reorganization of ideas

- Significant struggle with initial drafting and connection of concepts

Confidence moments:

- “When I found the Oxford University Press entry… I felt confident in organizing the symbolism part”

- “When I rearranged my main points, I finally felt confident” (confidence came after overcoming structural challenges)

Litero group: AI-assisted academic writing

Primary pain points:

- Source verification – “Verifying sources” and ensuring relevance became the main focus

- Citation accuracy – “Checking sources and making sure citations were correct”

- Quality control rather than content creation

Transformed workflow:

- Rapid initial drafting with AI assistance

- Shift from creation struggles to verification and refinement

- Streamlined research integration

- Focus on quality control rather than content generation

Confidence moments:

- “I did not feel any frustration or anxiousness”

- “The process was overall easy”

- “The flow was natural”

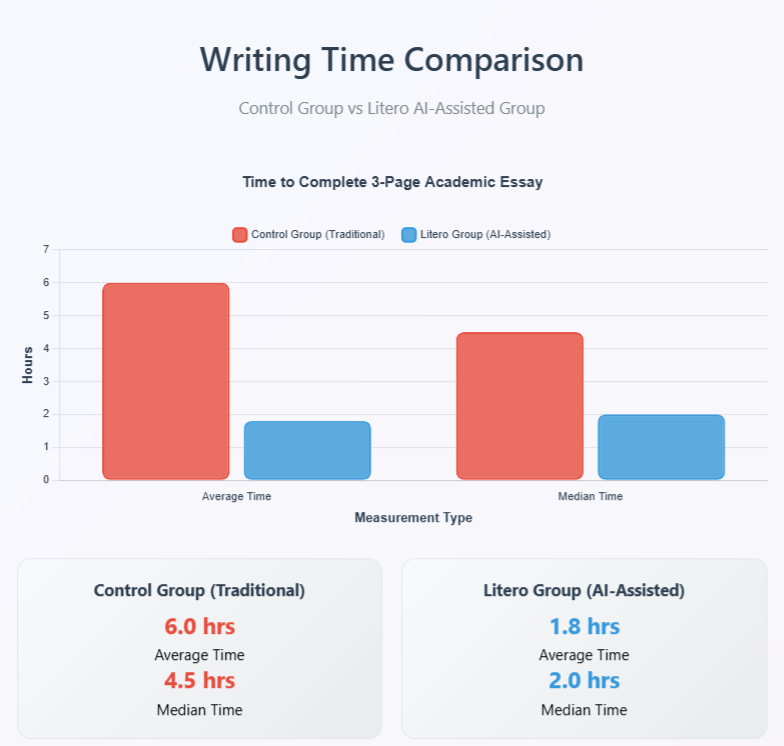

Time efficiency: reduction in writing hours

Control Group (Traditional methods):

- Average time: 6 hours

- Median time: 4.5 hours

- Range: 2-12 hours (a few students worked marathon 12-hour sessions)

Litero group (AI-assisted):

- Average time: 1.8 hours

- Median time: 2 hours

- Range: 30 minutes – 4 hours

Key finding: Litero users completed assignments about 3.3x faster on average (1.8 vs. 6 hours, or 70% less time), and the median Litero student finished in less than half the median control time (2 vs. 4.5 hours).
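The "70% less time" and "about 3.3x faster" figures describe the same gap in two ways: one is the fraction of time saved, the other is the ratio of the two durations. The short sketch below derives both from the group averages and medians reported above. The function name `time_savings` is a hypothetical helper introduced only for illustration, and it works on the reported summary statistics, not on the per-student data (averaging each student's individual ratio could give a different number).

```python
def time_savings(control_hours: float, ai_hours: float) -> tuple[float, float]:
    """Return (fraction of time saved, speed-up factor) for two durations.

    Hypothetical helper: compares two summary statistics, e.g. group means.
    """
    if control_hours <= 0 or ai_hours <= 0:
        raise ValueError("durations must be positive")
    saved = 1 - ai_hours / control_hours   # share of the control time no longer needed
    speedup = control_hours / ai_hours     # how many times faster the AI group was
    return saved, speedup


# Summary figures reported in this study (hours)
mean_saved, mean_speedup = time_savings(6.0, 1.8)
median_saved, median_speedup = time_savings(4.5, 2.0)

print(f"Mean:   {mean_saved:.0%} less time, {mean_speedup:.1f}x faster")
# prints "Mean:   70% less time, 3.3x faster"
print(f"Median: {median_saved:.0%} less time, {median_speedup:.2f}x faster")
# prints "Median: 56% less time, 2.25x faster"
```

A 70% reduction in time corresponds to a speed-up of 1 / 0.3 ≈ 3.3x, which is why "70% faster" would understate the ratio while "70% less time" states it precisely.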

Cognitive load shift: from creation to curation

The most striking difference was not just time savings, but a fundamental shift in where students spent their mental energy:

Control group: Struggled with content creation, organization, and basic research execution

Litero group: Focused on verification, quality assessment, and academic integrity

This represents exactly the kind of cognitive elevation that ethical AI should provide – moving students from mechanical tasks to higher-order thinking about source quality, argument strength, and academic rigor.

Confidence patterns: struggle vs flow

Control group confidence: Student confidence emerged only after overcoming significant obstacles. They gained confidence through problem-solving and breakthrough moments, achieving flow states despite the challenging writing process rather than because of it.

Litero group confidence: Students maintained steady confidence throughout the entire process due to reduced friction points. Flow states emerged naturally from the streamlined workflow, with minimal anxiety or frustration reported across all participants.

Study findings and implications

This comparative study addresses fundamental questions about AI-assisted academic writing, pointing to both significant benefits and areas for continued development.

Quality outcomes remained largely consistent between traditional and AI-assisted approaches. Independent educators awarded similar grades (B average for traditional methods, B- for AI-assisted), with both groups demonstrating comparable strengths in organization and argumentation. While the AI-assisted group scored slightly lower on average, both groups faced similar challenges with evidence specificity and instruction compliance, suggesting that core academic skills remain essential regardless of the tools used.

The efficiency gains were dramatic and clear. Students using Litero completed identical assignments in 70% less time—averaging 1.8 hours versus 6 hours for traditional methods. This represents a fundamental shift in cognitive load allocation: where traditional writers struggled with content generation and initial organization, AI-assisted students focused their energy on source verification, argument refinement, and quality assessment. This cognitive elevation—from mechanical creation to critical curation—suggests AI tools can redirect student effort toward higher-order analytical tasks.

The transformation in student experience was equally significant. Traditional writers described breakthrough moments that came after overcoming substantial obstacles, building confidence through productive struggle. AI-assisted writers maintained steady confidence throughout, experiencing natural flow states with minimal frustration. This shift represents a meaningful reduction in academic anxiety while maintaining academic rigor.

Academic integrity was successfully maintained when students followed proper guidelines. Both groups produced legitimate academic work that met institutional standards, demonstrating that AI assistance, when properly implemented, supports rather than compromises academic honesty.

These findings suggest that AI writing assistance offers substantial efficiency benefits while maintaining academic standards, though continued refinement of both tools and implementation strategies can further optimize learning outcomes.

Looking ahead

These findings suggest that educators need not fear AI writing tools, but rather develop frameworks for their effective integration. The priority is to design assignments and rubrics that combine AI’s efficiency with the depth and specificity required for real learning. Instead of banning AI, institutions should teach students when and how to apply it responsibly.

The future of academic writing will be a collaboration between human judgment and AI capability, maintaining rigor while reducing unnecessary friction.

Data availability

Anonymized student responses and complete educator feedback used in this study are available upon request for research verification and academic review.

This report was produced using AI-assisted research tools to accelerate data collection and analysis. All findings were then manually curated, verified, and interpreted by human researchers.

Cite this article


Ivakhnenko, M. (2025, August 22). Litero Writing Lab: independent study on AI-assisted academic writing. Litero Blog. https://litero.ai/blog/litero-writing-lab-independent-study-ai-assisted-academic-writing/


Maria Ivakhnenko

Product Marketing Specialist

Maria is a Product Marketing Specialist at Litero who blends creativity, empathy, and strategy to help students and educators make the most of AI writing tools. With a background in product storytelling and user research, Maria bridges the gap between technology and human insight, shaping Litero’s brand voice, user experience, and community.
